COSC174 Project Proposal

Machine Learning with Prof. Lorenzo Torresani

Rui Wang, Tianxing Li, and Jing Li

Jan 24th, 2012

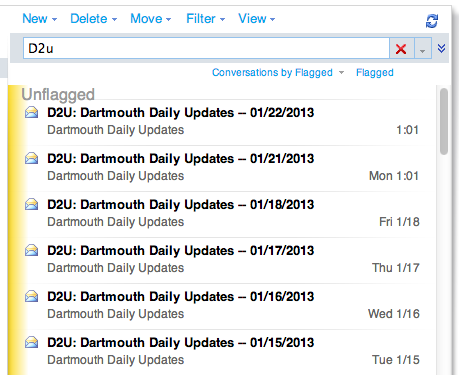

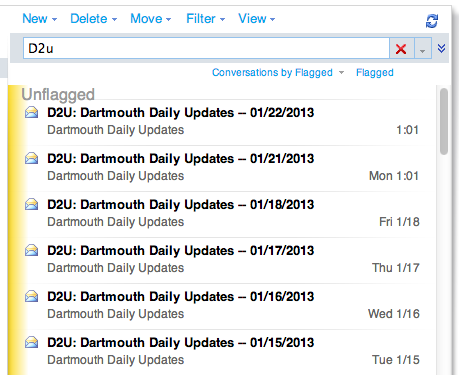

'Dartmouth Daily Updates', a daily email news digest sent to faculty, staff, and students, tells us what is happening on campus: seminars, art performances, flu shots, traditional events, and so on. However, whether you are about to go to bed at 1 am or quickly checking your email before starting work in the early morning, few of us read it carefully enough to pick out the useful items from such a large, poorly classified pool of information. Our aim is to provide a dream application that classifies the text into a predefined set of generic classes, for example talks, parties, and arts, so that people can find the information they are interested in at first sight.

Traditional classification methods based on the 'bag-of-words' representation have limitations in our case, since most events in the emails are described in short text messages. Because short text messages do not provide sufficient word occurrences, these traditional methods cannot classify the information correctly and efficiently. Rather than relying only on the text of the messages, our classification method also uses background knowledge specific to the college setting, such as the time of an event and the sender's profile. Our idea is to combine both kinds of features for text classification, so we propose the following methods.

1. Identifying Classifications:

We will identify the possible event classes before classifying each daily update item, and this step needs to be done manually. To do so, we will download the daily emails from Aug 2012 to today and read them one by one to build the labels for each event, such as art performance, sport activity, talks and seminars, parties, etc. The labels will need to satisfy both sensitivity and specificity.

2. Feature Expansion:

Since the texts from 'Dartmouth Daily Updates' are primarily short texts, for which traditional methods are not well suited, we need to expand our features using sender profiles, location information, and background information. Entries in the sender profiles and the event's location can be used as features directly, because they may indicate which department is holding a given event and hence what kind of event it is. Background knowledge, e.g. Wikipedia pages, can be used to expand our text features by exploiting the co-occurrence of terms. We expect to identify the important terms in the event descriptions with the help of this background knowledge.
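As a rough illustration of expanding a short text through term co-occurrence, the following Python sketch counts which terms appear together in a background corpus and appends the most frequent companions of each word. The background snippets, the function names `build_cooccurrence` and `expand`, and the `per_term` cutoff are all assumptions made for this example; real background text would come from sources such as Wikipedia pages.

```python
from collections import Counter, defaultdict

def build_cooccurrence(background_docs):
    """Count how often two distinct terms appear in the same background document."""
    cooc = defaultdict(Counter)
    for doc in background_docs:
        terms = set(doc.lower().split())
        for t in terms:
            for u in terms:
                if t != u:
                    cooc[t][u] += 1
    return cooc

def expand(text, cooc, per_term=2):
    """Append up to `per_term` top co-occurring terms for each word in `text`."""
    words = text.lower().split()
    expanded = list(words)
    for w in words:
        # Sort by descending count, then alphabetically, so ties are deterministic.
        ranked = sorted(cooc.get(w, {}).items(), key=lambda kv: (-kv[1], kv[0]))
        for term, _ in ranked[:per_term]:
            if term not in expanded:
                expanded.append(term)
    return expanded

# Hypothetical background snippets standing in for Wikipedia text.
background = [
    "orchestra concert music performance",
    "jazz concert music",
    "seminar lecture research talk",
    "colloquium research talk",
]
cooc = build_cooccurrence(background)
expanded_concert = expand("jazz concert", cooc)
expanded_talk = expand("talk", cooc)
print(expanded_concert)
print(expanded_talk)
```

In this toy corpus the one-word item "talk" gains "research" and "colloquium", which gives a classifier more word occurrences to work with than the original short text provides.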

3. Classifiers:

3.1 Classification Using SVM:

SVMs (support vector machines) have been widely used to solve text classification problems. Unlike traditional text classification, in which only text features are considered, we also need to use the non-text features mentioned above to identify the classes. The text is first preprocessed to remove stopwords and to stem words. It is then converted to a vector space in which every distinct word corresponds to a single dimension. The value of each entry is the word's term frequency-inverse document frequency (TF-IDF) weight. Features other than text are appended to the vector; their values can be discrete, representing particular entities. We need to find out how combining these two different types of features affects the performance of the classifier.
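The vector construction described above can be sketched in plain Python as follows. This is a minimal sketch under several assumptions: the sample items and sender names are made up, the stopword list is a tiny placeholder, stemming is omitted, TF-IDF is computed as (term count / document length) × log(N / document frequency), and the sender is encoded as a one-hot block. It only builds the combined feature vectors that would then be passed to an SVM; no classifier is trained here.

```python
import math
from collections import Counter

STOPWORDS = {"the", "a", "an", "in", "at", "of", "on", "and"}  # placeholder list

def tokenize(text):
    """Lowercase, split on whitespace, drop stopwords (no stemming in this sketch)."""
    return [w for w in text.lower().split() if w not in STOPWORDS]

def tfidf_vectors(texts):
    """Return the sorted vocabulary and one TF-IDF vector per text."""
    docs = [tokenize(t) for t in texts]
    vocab = sorted({w for d in docs for w in d})
    n = len(docs)
    df = Counter(w for d in docs for w in set(d))  # document frequency
    idf = {w: math.log(n / df[w]) for w in vocab}
    vectors = []
    for d in docs:
        tf = Counter(d)
        length = len(d) or 1  # avoid division by zero for empty items
        vectors.append([tf[w] / length * idf[w] for w in vocab])
    return vocab, vectors

def one_hot(value, categories):
    """Encode a discrete feature as a 0/1 block, one slot per category."""
    return [1.0 if value == c else 0.0 for c in categories]

# Hypothetical (text, sender) pairs.
items = [
    ("Jazz concert in the auditorium", "Music Department"),
    ("Seminar on machine learning", "Computer Science"),
    ("Chamber orchestra concert", "Music Department"),
]
texts = [t for t, _ in items]
senders = sorted({s for _, s in items})
vocab, text_vectors = tfidf_vectors(texts)

# Append the non-text block to each text vector.
combined = [tv + one_hot(s, senders) for tv, (_, s) in zip(text_vectors, items)]
print(len(vocab), len(combined[0]))
```

One design question this makes visible is scale: the TF-IDF entries are small fractions while the one-hot entries are 0 or 1, so the relative weight of the two blocks may need tuning before comparing classifier performance.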

3.2 Classification Using LSI:

LSI (Latent Semantic Indexing) [Deerwester et al.] overcomes the problems of lexical matching by using statistically derived conceptual indices instead of individual words for retrieval. It has three main advantages for our project: it handles synonymy, polysemy, and term dependence.
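To make the synonymy point concrete, here is a small dependency-free sketch of the idea behind LSI: documents are projected onto the top-k left singular subspace of the term-document matrix and compared there. The toy vocabulary and documents are invented for illustration, and the subspace is computed with a simple orthogonal (subspace) iteration on A·Aᵀ rather than a library SVD routine, which is what a real implementation would normally use.

```python
import math

def orthonormalize(vectors):
    """Gram-Schmidt: return an orthonormal basis for the given vectors."""
    basis = []
    for v in vectors:
        for b in basis:
            dot = sum(x * y for x, y in zip(v, b))
            v = [x - dot * y for x, y in zip(v, b)]
        norm = math.sqrt(sum(x * x for x in v))
        basis.append([x / norm for x in v])
    return basis

def top_k_subspace(A, k, iters=50):
    """Orthogonal iteration on A A^T; spans the top-k left singular subspace of A."""
    gram = [[sum(x * y for x, y in zip(r1, r2)) for r2 in A] for r1 in A]
    n = len(A)
    # Deterministic, linearly independent starting vectors.
    basis = orthonormalize([[1.0 / (1 + i + j) for j in range(n)] for i in range(k)])
    for _ in range(iters):
        basis = orthonormalize(
            [[sum(g * x for g, x in zip(row, b)) for row in gram] for b in basis]
        )
    return basis

def project(doc_vector, basis):
    """Coordinates of a document in the latent (concept) space."""
    return [sum(b_i * x for b_i, x in zip(b, doc_vector)) for b in basis]

def cosine(u, v):
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(x * x for x in v)))

# Toy term-document matrix (rows: terms, columns: documents).
terms = ["talk", "seminar", "concert", "music"]
docs = [["talk", "seminar"], ["talk"], ["seminar"],
        ["concert", "music"], ["concert"], ["music"]]
A = [[float(d.count(t)) for d in docs] for t in terms]
basis = top_k_subspace(A, k=2)

def doc_column(j):
    return [A[i][j] for i in range(len(terms))]

talk, seminar, concert = doc_column(1), doc_column(2), doc_column(4)
raw_sim = cosine(talk, seminar)                                      # no shared words
latent_sim = cosine(project(talk, basis), project(seminar, basis))   # same concept
latent_cross = cosine(project(talk, basis), project(concert, basis)) # different concept
print(raw_sim, latent_sim, latent_cross)
```

The one-word documents "talk" and "seminar" share no terms, so their raw cosine similarity is zero, yet in the two-dimensional latent space they end up pointing in nearly the same direction because a third document uses both words together. The "talk" and "concert" documents stay nearly orthogonal.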

3.3 Classification Using LDA:

LDA (Latent Dirichlet Allocation) is a simple model that has been widely used in topic modeling. Although we view it as a competitor to methods like LSI for dimensionality reduction of document collections and other discrete corpora, it is also intended to illustrate how probabilistic models can be scaled up to provide useful inferential machinery in domains involving multiple levels of structure. The principal advantages of LDA are its modularity and its extensibility. As a probabilistic module, LDA can be readily embedded in a more complex model, a property that LSI does not possess.

4. Multi-Label Classification:

A daily email digest usually contains information from multiple categories. We will train not only on whole daily emails, each carrying multiple labels that represent different categories, but also on the individual items of a daily email, each carrying a single label. There are two main families of methods for the multi-label case: problem transformation methods, which transform the problem into a set of single-label classification problems, and algorithm adaptation methods, which perform multi-label classification directly. We also need to consider that a single item can itself carry several labels: a musical performance, for example, can be labeled as a performance announcement for its purpose and as music for its content.
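One common problem transformation method is binary relevance, which builds one yes/no dataset per label. The sketch below shows only the data transformation and how per-label decisions are merged back into a label set. The sample items, the label names, and the keyword-based stand-in classifiers are hypothetical; in practice each binary dataset would be used to train a real classifier such as an SVM.

```python
def binary_relevance(items, labels):
    """Turn one multi-label dataset into one binary dataset per label."""
    return {
        label: [(text, label in item_labels) for text, item_labels in items]
        for label in labels
    }

def predict_label_set(classifiers, text):
    """Merge per-label yes/no decisions into a set of predicted labels."""
    return {label for label, clf in classifiers.items() if clf(text)}

# Hypothetical multi-label items: (text, set of labels).
items = [
    ("Chamber orchestra concert tonight", {"performance", "music"}),
    ("Guest lecture on music theory", {"talk", "music"}),
    ("Robotics seminar", {"talk"}),
]
labels = sorted({label for _, item_labels in items for label in item_labels})
datasets = binary_relevance(items, labels)

# Stand-in classifiers: simple keyword rules in place of trained models.
classifiers = {
    "music": lambda t: "music" in t.lower() or "concert" in t.lower(),
    "performance": lambda t: "concert" in t.lower(),
    "talk": lambda t: "lecture" in t.lower() or "seminar" in t.lower(),
}
predicted = predict_label_set(classifiers, "Jazz concert on the green")
print(labels)
print(predicted)
```

Binary relevance treats labels independently, so it cannot learn that "performance" and "music" tend to occur together; that is one of the trade-offs to weigh against algorithm adaptation methods.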

Our aim is to compare different classifiers, and to compare single-label and multi-label classification methods, to find out which classifier and which method give the best performance.

We will gather our data set ourselves. It consists of all 'Dartmouth Daily Updates' emails since August 2012, plus all the link URLs attached to these emails.

Jan. 8th - Jan. 15th:

Developing our ideas for a machine learning project.

Jan. 15th - Jan. 27th:

Writing our proposal.

Reading through all 'Dartmouth Daily Updates' emails to find several classification labels.

Jan. 27th - Feb. 3rd:

Extracting all features from the emails, e.g., sender profiles, location and time of the events.

Preprocessing the emails using these features.

Feb. 3rd - Feb. 10th:

Starting to train our classifier model with the features we developed, using SVM.

Feb. 10th - Feb. 17th:

Tweaking our model and testing it on more data from new 'Dartmouth Daily Updates' emails.

Feb. 17th - Mar. 7th:

Writing our final report and making the poster for presentation.

[1] Bharath Sriram, David Fuhry, Engin Demir, Hakan Ferhatosmanoglu, Murat Demirbas. Short Text Classification in Twitter to Improve Information Filtering.

[2] Xia Hu, Nan Sun, Chao Zhang, Tat-Seng Chua. Exploiting Internal and External Semantics for the Clustering of Short Texts Using World Knowledge.

[3] Sarah Zelikovitz. Transductive LSI for Short Text Classification Problems.

[4] Barbara Rosario. Latent Semantic Indexing: An overview.

[5] Deerwester, Dumais, Furnas, Landauer, and Harshman. Indexing by latent semantic analysis.

[6] David M. Blei, Andrew Y. Ng, Michael I. Jordan. Latent Dirichlet Allocation.